Publications

This list may not be up to date. For the most current publications, please refer to my Google Scholar profile.

2025

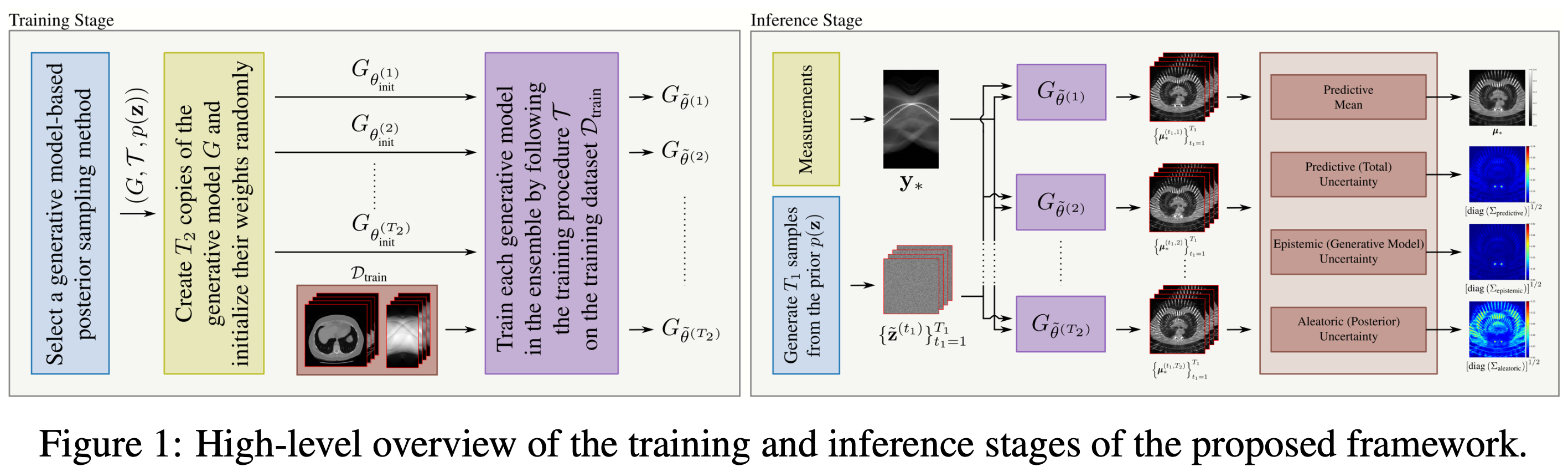

- ArXiv

Conformalized Generative Bayesian Imaging: An Uncertainty Quantification Framework for Computational Imaging
Canberk Ekmekci and Mujdat Cetin
In ArXiv, 2025

Uncertainty quantification plays an important role in achieving trustworthy and reliable learning-based computational imaging. Recent advances in generative modeling and Bayesian neural networks have enabled the development of uncertainty-aware image reconstruction methods. Current generative model-based methods seek to quantify the inherent (aleatoric) uncertainty on the underlying image for given measurements by learning to sample from the posterior distribution of the underlying image. On the other hand, Bayesian neural network-based approaches aim to quantify the model (epistemic) uncertainty on the parameters of a deep neural network-based reconstruction method by approximating the posterior distribution of those parameters. Unfortunately, there is still a need for an inversion method that can jointly quantify complex aleatoric and epistemic uncertainty patterns. In this paper, we present a scalable framework that can quantify both aleatoric and epistemic uncertainties. The proposed framework accepts an existing generative model-based posterior sampling method as an input and introduces an epistemic uncertainty quantification capability through Bayesian neural networks with latent variables and deep ensembling. Furthermore, by leveraging the conformal prediction methodology, the proposed framework can be easily calibrated to ensure rigorous uncertainty quantification. We evaluated the proposed framework on magnetic resonance imaging, computed tomography, and image inpainting problems and showed that the epistemic and aleatoric uncertainty estimates produced by the proposed framework display the characteristic features of true epistemic and aleatoric uncertainties. Furthermore, our results demonstrated that the use of conformal prediction on top of the proposed framework enables marginal coverage guarantees consistent with frequentist principles.
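As a rough illustration of the calibration idea mentioned in the abstract above, the sketch below applies split conformal prediction to scalar predictions with an uncertainty-scaled interval. It is not the paper's procedure: the function name `conformal_scale`, the normalized-residual score, and the synthetic data (a predictor whose claimed standard deviation is too small by a factor of two) are all assumptions made for this example.

```python
import numpy as np

def conformal_scale(y_cal, mu_cal, sigma_cal, alpha=0.1):
    """Calibrate q so that mu +/- q * sigma has marginal coverage >= 1 - alpha."""
    # Nonconformity score: absolute residual normalized by the claimed uncertainty.
    scores = np.sort(np.abs(y_cal - mu_cal) / sigma_cal)
    n = scores.size
    # Finite-sample rank used by split conformal prediction.
    k = int(np.ceil((n + 1) * (1 - alpha)))
    return np.inf if k > n else scores[k - 1]

# Hypothetical data: true noise std is 1.0, but the model claims 0.5.
rng = np.random.default_rng(0)
n_cal, n_test = 2000, 2000
mu = rng.normal(size=n_cal + n_test)          # point predictions
y = mu + rng.normal(scale=1.0, size=mu.size)  # ground truth values
sigma = np.full(mu.size, 0.5)                 # overconfident uncertainty estimate

# Calibrate on the first n_cal points, evaluate on the remaining ones.
q = conformal_scale(y[:n_cal], mu[:n_cal], sigma[:n_cal], alpha=0.1)
lower = mu[n_cal:] - q * sigma[n_cal:]
upper = mu[n_cal:] + q * sigma[n_cal:]
coverage = np.mean((y[n_cal:] >= lower) & (y[n_cal:] <= upper))
print(f"scale q = {q:.2f}, empirical coverage = {coverage:.3f}")
```

The guarantee is marginal: it holds on average over calibration and test draws that are exchangeable, not for each individual pixel or image.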

2024

- NeurIPS Workshops

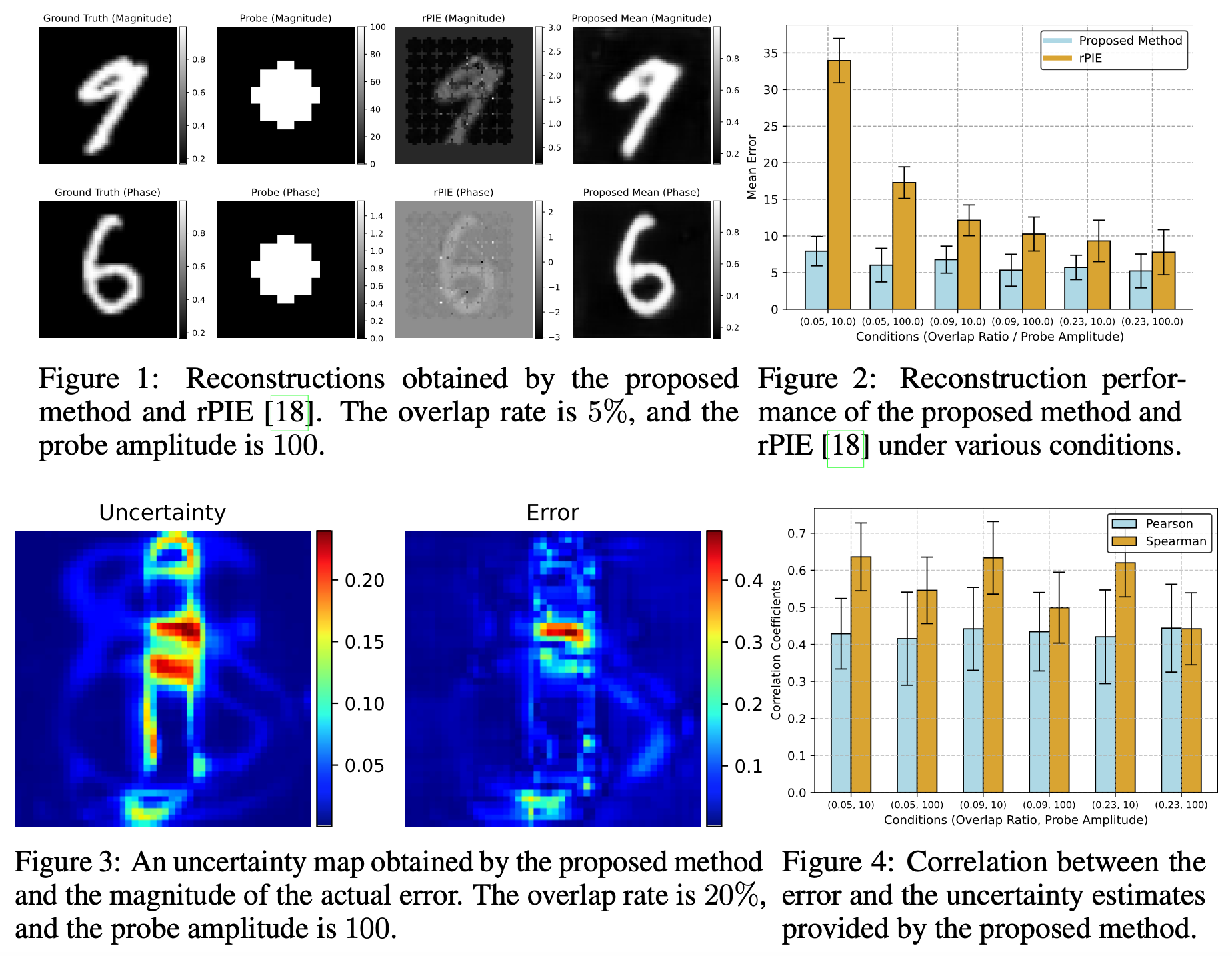

Integrating Generative and Physics-Based Models for Ptychographic Imaging with Uncertainty Quantification
Canberk Ekmekci, Tekin Bicer, Zichao (Wendy) Di, and 2 more authors
In Machine Learning and the Physical Sciences Workshop at NeurIPS 2024, 2024

Ptychography is a scanning coherent diffractive imaging technique that enables imaging nanometer-scale features in extended samples. One main challenge is that widely used iterative image reconstruction methods often require a significant amount of overlap between adjacent scan locations, leading to large data volumes and prolonged acquisition times. To address this key limitation, this paper proposes a Bayesian inversion method for ptychography that performs effectively even with less overlap between neighboring scan locations. Furthermore, the proposed method can quantify the inherent uncertainty on the ptychographic object, which is created by the ill-posed nature of the ptychographic inverse problem. At a high level, the proposed method first utilizes a deep generative model to learn the prior distribution of the object and then generates samples from the posterior distribution of the object by using a Markov Chain Monte Carlo algorithm. Our results from simulated ptychography experiments show that the proposed framework can consistently outperform a widely used iterative reconstruction algorithm in cases of reduced overlap. Moreover, the proposed framework can provide uncertainty estimates that closely correlate with the true error, which is not available in practice.

- GJI

On the Detection of Upper Mantle Discontinuities with Radon-Transformed Receiver Functions (CRISP-RF)
Tolulope Olugboji, Ziqi Zhang, Steve Carr, and 2 more authors
Geophysical Journal International, Feb 2024

Seismic interrogation of the upper mantle from the base of the crust to the top of the mantle transition zone has revealed discontinuities that vary in space, depth, lateral extent, and amplitude, and that lack a unified explanation for their origin. Improved constraints on the detectability and properties of mantle discontinuities can be obtained with P-to-S receiver function (Ps-RF) where energy scatters from P to S as seismic waves propagate across discontinuities of interest. However, due to the interference of crustal multiples, uppermost mantle discontinuities are more commonly imaged with lower-resolution S-to-P receiver function (Sp-RF). In this study, a new method called CRISP-RF (Clean Receiver-function Imaging using SParse Radon Filters) is proposed, which incorporates ideas from compressive sensing and model-based image reconstruction. The central idea involves applying a sparse Radon transform to effectively decompose the Ps-RF into its underlying wavefield contributions, that is, direct conversions, multiples, and noise, based on the phase moveout and coherence. A masking filter is then designed and applied to create a multiple-free and denoised Ps-RF. We demonstrate, using synthetic experiments, that our implementation of the Radon transform using a sparsity-promoting regularization outperforms the conventional least-squares methods and can effectively isolate direct Ps conversions. We further apply the CRISP-RF workflow on real data, including single station data on cratons, common-conversion-point stack at continental margins and seismic data from ocean islands. The application of CRISP-RF to global data sets will advance our understanding of the enigmatic origins of the upper mantle discontinuities like the ubiquitous mid-lithospheric discontinuity and the elusive X-discontinuity.

2023

- NeurIPS Workshops

Quantifying Generative Model Uncertainty in Posterior Sampling Methods for Computational Imaging
Canberk Ekmekci and Mujdat Cetin
In NeurIPS 2023 Workshop on Deep Learning and Inverse Problems, Feb 2023

The idea of using generative models to perform posterior sampling for imaging inverse problems has elicited attention from the computational imaging community. The main limitation of the existing generative model-based posterior sampling methods is that they do not provide any information about how uncertain the generative model is. In this work, we propose a quick-to-adopt framework that can transform a given generative model-based posterior sampling method into a statistical model that can quantify the generative model uncertainty. The proposed framework is built upon the principles of Bayesian neural networks with latent variables and uses ensembling to capture the uncertainty on the parameters of a generative model. We evaluate the proposed framework on the computed tomography reconstruction problem and demonstrate its capability to quantify generative model uncertainty with an illustrative example. We also show that the proposed method can improve the quality of the reconstructions and the predictive uncertainty estimates of the generative model-based posterior sampling method used within the proposed framework.
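One common way to summarize the output of an ensemble of posterior samplers is to split the pooled sample variance with the law of total variance. The sketch below shows that decomposition on synthetic arrays; it is an assumption about how such samples might be post-processed, not the estimator used in the paper, and `decompose_uncertainty` and the array layout are hypothetical.

```python
import numpy as np

def decompose_uncertainty(samples):
    """Split pixelwise variance of ensemble samples into two parts.

    samples has shape (n_members, n_samples, *image_shape): each ensemble
    member contributes n_samples posterior samples of the image.
    """
    member_means = samples.mean(axis=1)  # per-member posterior mean
    member_vars = samples.var(axis=1)    # per-member posterior variance
    aleatoric = member_vars.mean(axis=0)  # average within-member spread
    epistemic = member_means.var(axis=0)  # disagreement between members
    return aleatoric, epistemic

# Hypothetical ensemble: members disagree by a small offset (std 0.3),
# and each member's samples have unit standard deviation.
rng = np.random.default_rng(0)
n_members, n_samples, shape = 5, 200, (8, 8)
offsets = rng.normal(scale=0.3, size=(n_members, 1, *shape))
samples = offsets + rng.normal(scale=1.0, size=(n_members, n_samples, *shape))

aleatoric, epistemic = decompose_uncertainty(samples)
# Variance of all samples pooled together, for comparison.
total = samples.reshape(-1, *shape).var(axis=0)
print(np.allclose(total, aleatoric + epistemic))
```

With equal sample counts per member and population variances (NumPy's default `ddof=0`), the two terms add up exactly to the pooled variance.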

- EI-COIMG

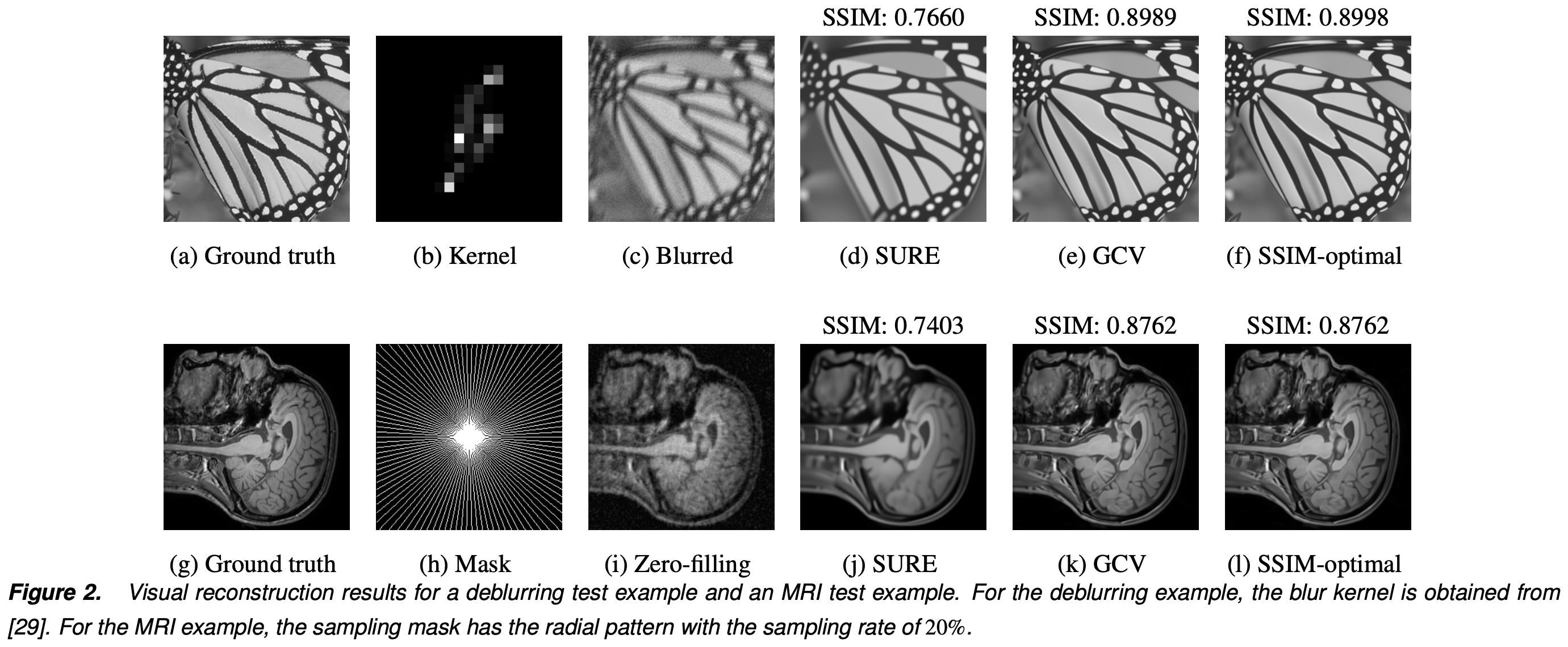

Automatic Parameter Tuning for Plug-and-Play Algorithms Using Generalized Cross Validation and Stein’s Unbiased Risk Estimation for Linear Inverse Problems in Computational Imaging
Canberk Ekmekci and Mujdat Cetin
In IS&T International Symposium on Electronic Imaging, Jan 2023

We propose two automatic parameter tuning methods for Plug-and-Play (PnP) algorithms that use CNN denoisers. We focus on linear inverse problems and propose an iterative algorithm to calculate generalized cross-validation (GCV) and Stein’s unbiased risk estimator (SURE) functions for a half-quadratic splitting-based PnP (PnP-HQS) algorithm that uses a state-of-the-art CNN denoiser. The proposed methods leverage forward mode automatic differentiation to calculate the GCV and SURE functions and tune the parameters of a PnP-HQS algorithm automatically by minimizing the GCV and SURE functions using grid search. Because linear inverse problems appear frequently in computational imaging, the proposed methods can be applied in various domains. Furthermore, because the proposed methods rely on GCV and SURE functions, they do not require access to the ground truth image and do not require collecting an additional training dataset, which is highly desirable for imaging applications for which acquiring data is costly and time-consuming. We evaluate the performance of the proposed methods on deblurring and MRI experiments and show that the GCV-based proposed method achieves comparable performance to that of the oracle tuning method that adjusts the parameters by maximizing the structural similarity index between the ground truth image and the output of the PnP algorithm. We also show that the SURE-based proposed method often leads to worse performance compared to the GCV-based proposed method.
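To make the idea of ground-truth-free tuning concrete, the sketch below selects a regularization weight by minimizing a GCV score over a grid. It swaps in techniques that are much simpler than the paper's: Tikhonov regularization stands in for the PnP-HQS algorithm, the trace of the influence matrix comes from an SVD rather than forward mode automatic differentiation, and the oracle uses the Euclidean error instead of the structural similarity index. The names `gcv_scores` and `tikhonov`, the 1D blur operator, and the test signal are all assumptions made for this example.

```python
import numpy as np

def gcv_scores(A, y, lambdas):
    """GCV(lam) = m * ||(I - H)y||^2 / trace(I - H)^2, with H the influence matrix."""
    U, s, _ = np.linalg.svd(A, full_matrices=False)
    m = y.size
    uty = U.T @ y
    # Energy of y outside the range of A; no choice of lam can remove it.
    out_of_range = max(y @ y - uty @ uty, 0.0)
    scores = []
    for lam in lambdas:
        f = s**2 / (s**2 + lam)  # eigenvalues of H for Tikhonov regularization
        residual = np.sum(((1.0 - f) * uty) ** 2) + out_of_range
        scores.append(m * residual / (m - np.sum(f)) ** 2)
    return np.array(scores)

def tikhonov(A, y, lam):
    """Minimizer of ||A x - y||^2 + lam * ||x||^2, computed via the SVD."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    return Vt.T @ ((s / (s**2 + lam)) * (U.T @ y))

# Hypothetical 1D deblurring problem with a row-normalized Gaussian blur.
rng = np.random.default_rng(0)
n = 100
idx = np.arange(n)
A = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / 2.0) ** 2)
A /= A.sum(axis=1, keepdims=True)
x_true = np.sin(2 * np.pi * idx / n) + (idx > 60)
y = A @ x_true + 0.01 * rng.normal(size=n)

# Grid search: GCV needs only y, while the oracle needs x_true.
lambdas = np.logspace(-8, 2, 41)
scores = gcv_scores(A, y, lambdas)
errors = np.array([np.linalg.norm(tikhonov(A, y, lam) - x_true) for lam in lambdas])
lam_gcv = lambdas[np.argmin(scores)]
err_gcv = errors[np.argmin(scores)]
err_oracle = errors.min()
print(f"GCV picks lam = {lam_gcv:.1e}: error {err_gcv:.3f} (oracle {err_oracle:.3f})")
```

The oracle error is a lower bound over the same grid, so the gap between the two printed errors shows how much is lost by tuning without the ground truth.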

2022

- IEEE TCI

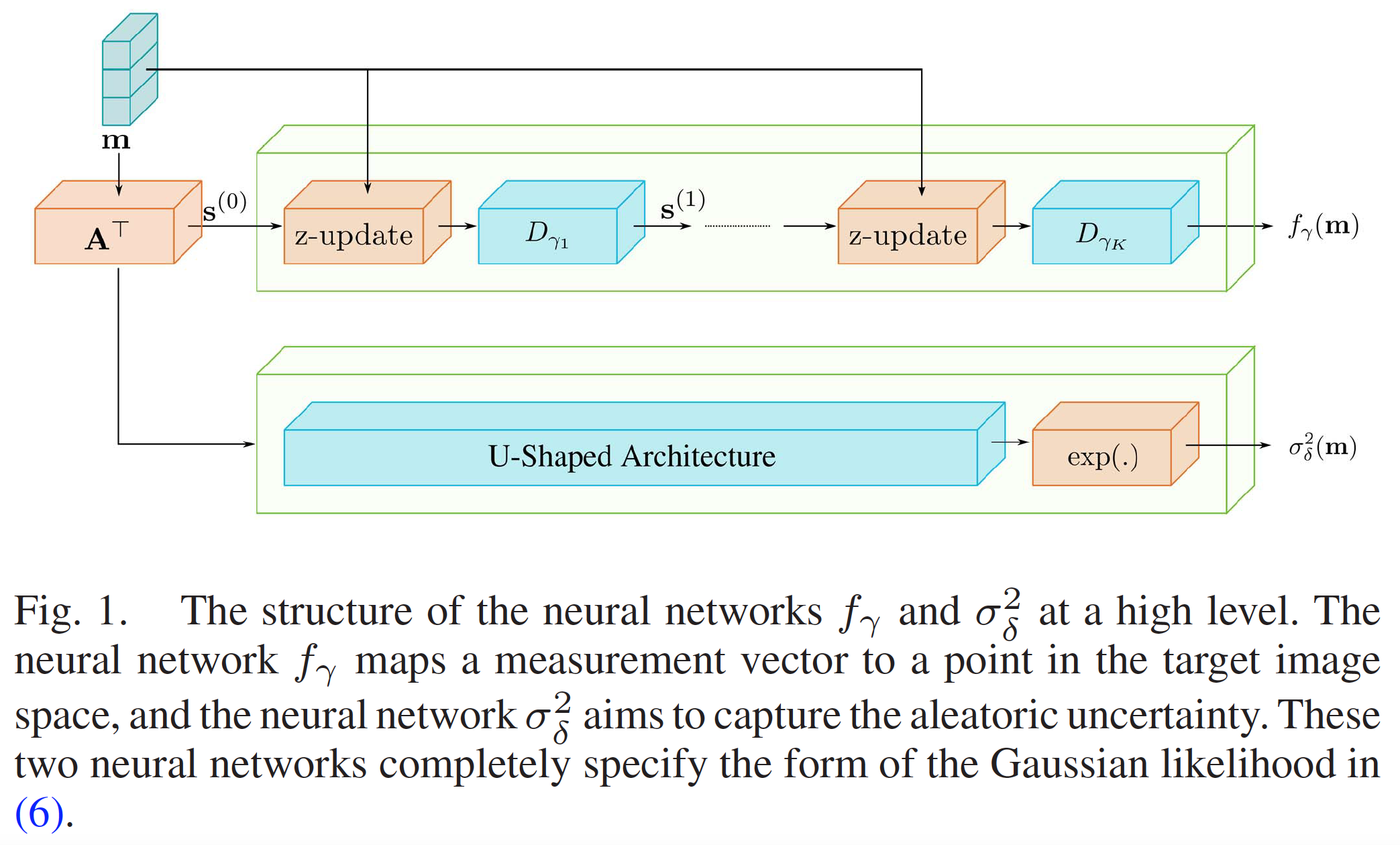

Uncertainty Quantification for Deep Unrolling-Based Computational Imaging
Canberk Ekmekci and Mujdat Cetin
IEEE Transactions on Computational Imaging, Jan 2022

Deep unrolling is an emerging deep learning-based image reconstruction methodology that bridges the gap between model-based and purely deep learning-based image reconstruction methods. Although deep unrolling methods achieve state-of-the-art performance for imaging problems and allow the incorporation of the observation model into the reconstruction process, they do not provide any uncertainty information about the reconstructed image, which severely limits their use in practice, especially for safety-critical imaging applications. In this article, we propose a learning-based image reconstruction framework that incorporates the observation model into the reconstruction task and that is capable of quantifying epistemic and aleatoric uncertainties, based on deep unrolling and Bayesian neural networks. We demonstrate the uncertainty characterization capability of the proposed framework on magnetic resonance imaging and computed tomography reconstruction problems. We investigate the characteristics of the epistemic and aleatoric uncertainty information provided by the proposed framework to motivate future research on utilizing uncertainty information to develop more accurate, robust, trustworthy, uncertainty-aware, learning-based image reconstruction and analysis methods for imaging problems. We show that the proposed framework can provide uncertainty information while achieving comparable reconstruction performance to state-of-the-art deep unrolling methods.

2021

- EI-COIMG

Model-Based Bayesian Deep Learning Architecture for Linear Inverse Problems in Computational Imaging
Canberk Ekmekci and Mujdat Cetin
In Proceedings of the IS&T International Symposium on Electronic Imaging: Computational Imaging XIX, Jan 2021

We propose a neural network architecture combined with specific training and inference procedures for linear inverse problems arising in computational imaging to reconstruct the underlying image and to represent the uncertainty about the reconstruction. The proposed architecture is built from the model-based reconstruction perspective, which enforces data consistency and eliminates the artifacts in an alternating manner. The training and the inference procedures are based on performing approximate Bayesian analysis on the weights of the proposed network using a variational inference method. The proposed architecture with the associated inference procedure is capable of characterizing uncertainty while performing reconstruction with a model-based approach. We tested the proposed method on a simulated magnetic resonance imaging experiment. We showed that the proposed method achieved an adequate reconstruction capability and provided reliable uncertainty estimates in the sense that the regions having high uncertainty provided by the proposed method are likely to be the regions where reconstruction errors occur.

- ICCV Workshops

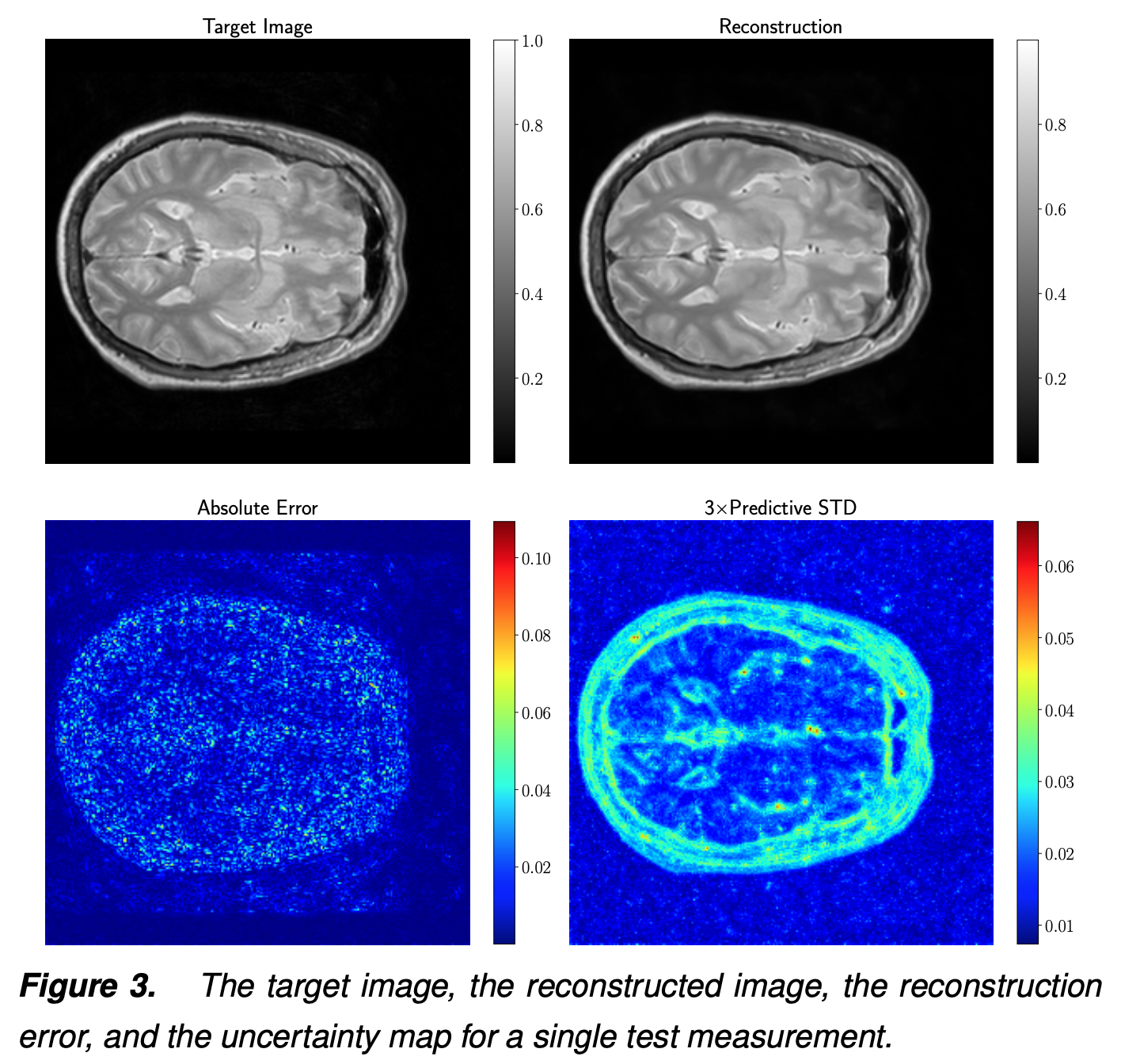

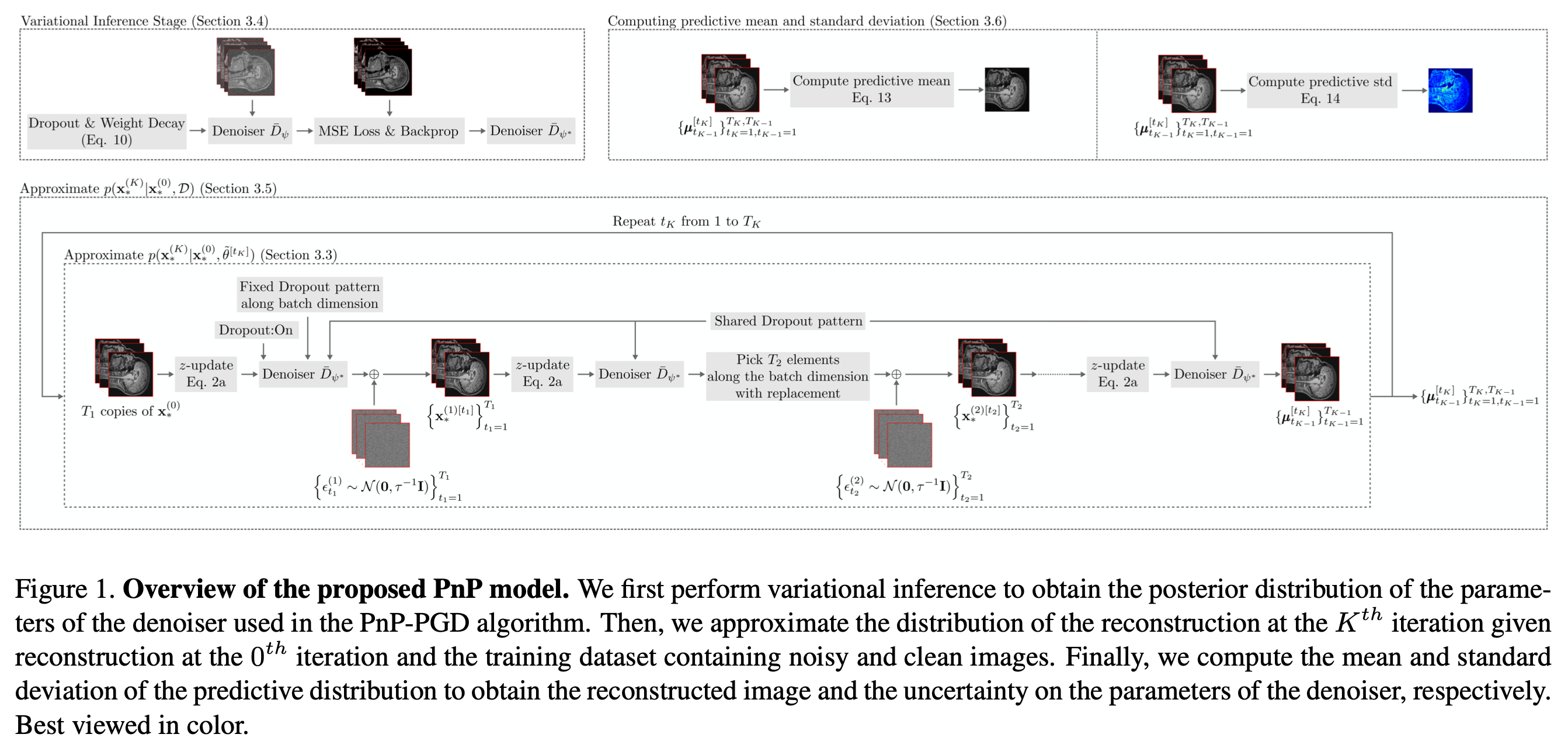

What Does Your Computational Imaging Algorithm Not Know?: A Plug-and-Play Model Quantifying Model Uncertainty
Canberk Ekmekci and Mujdat Cetin
In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Oct 2021

Plug-and-Play is an algorithmic framework developed to solve image recovery problems. Thanks to the empirical success of convolutional neural network (CNN) denoisers, numerous Plug-and-Play algorithms utilizing CNN denoisers have been proposed to solve various image recovery tasks. Unfortunately, those Plug-and-Play algorithms do not represent the uncertainty on the parameters of CNN denoisers because their training procedure yields only a point estimate for the parameters of the CNN denoiser. In this paper, we present a novel Plug-and-Play model that quantifies the uncertainty on the parameters of the CNN denoiser. The proposed model places a probability distribution on the parameters of the CNN denoiser and carries out approximate Bayesian inference to obtain the posterior distribution of the parameters to characterize their uncertainty. The uncertainty information provided by the proposed Plug-and-Play model allows characterizing how certain the model is for a given input. The proposed Plug-and-Play model is applicable to a broad set of computational imaging problems, with the requirement that the data fidelity term is differentiable, and has a simple implementation in deep learning frameworks. We evaluate the proposed Plug-and-Play model on a magnetic resonance imaging reconstruction problem and demonstrate its uncertainty characterization capability.